Over the last few posts we put together a SQL Server, a Connection Server and a Linux desktop, set up certificates, and created a working manual desktop pool. That gives us a basic deployment of Horizon View. It's good for kicking the tires but very labour-intensive to maintain in production.

In a production environment there is much more to consider than what we've thrown together so far: availability, security, logging, monitoring, alerting, desktop pools, desktop OS and budget, to name a few.

Before jumping in and creating an awesome design, you'll always want to find out exactly what the requirements are. "Because" is not an answer. For example, you should be asking questions along the lines of:

- What do the different stakeholders think they are getting?

- What does your network look like?

- What kind of security do you have between your networks and/or VLANs?

- Are redundancy and resilience factors to consider? (And yes, they can be different things.)

- Do you have approved Windows or Linux builds?

- What is your patching schedule?

- Do you have a standard user base, or is this intended for users with differing requirements, e.g. dev, eng, admin?

- Does this service need to be available externally, or is it an internal service only?

- Have you met with security?

- Apart from the requirements, have you evaluated the risks and constraints?

- In the absence of concrete answers, have you made your clients/manager aware of any assumptions you've made? e.g. "The project plan assumes that the current in-server disk controllers will be replaced with HPE P416ie controllers for vSAN compliance."

When working out the requirements, constraints, risks and assumptions, be specific. Ambiguous or open-ended answers will lead to scope creep and make your job more difficult.

However, for the next set of posts we'll be fleshing out the environment against these (very) high-level requirements:

- n+1 redundancy of the VDI deployment.

- External access.

- Load balancing (if possible).

- Two different types of users: devs and technical admins.

- Two different desktop OSes available.

- Profiles that persist between sessions.

- Security: no copy and paste, 2FA, logging, and only the required ports open between VLANs.

- Monitoring.

This is more than enough to send us back asking many, many questions, but for now we'll pretend that most of them have been answered.

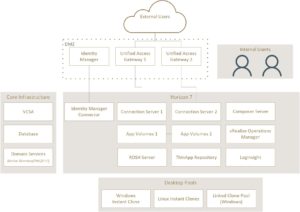

So that we don't go too far off piste, I'll mostly be sticking to a stripped-down, slightly modified version of VMware's reference architecture for the mobility suite, which can be found here. The diagram below is partly taken from the linked page and modified to fit into my lab (hopefully). I'll also make sure I reference any other blogs that I pull info from.

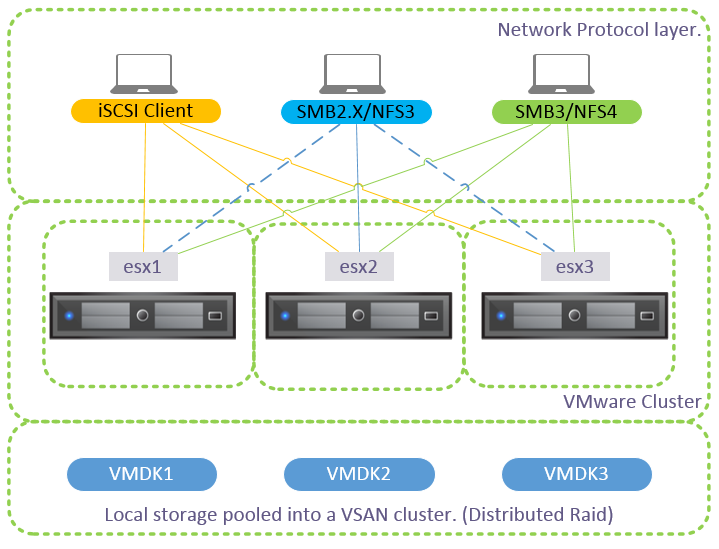

P.S. For the ESXi servers, I'll be using William Lam's most excellent nested ESXi appliances, which can be deployed via OVA onto either ESXi or Workstation/Fusion.